The potential of AI in healthcare has the dazzling shine of a good Hollywood production. Its demos are spectacular; its promise, bold and gigantic. However, the greatest benefits that AI has to offer will come from the field of management. Transformations in other areas will take years to arrive, if they ever do.

One of the first applications in the history of Artificial Intelligence was called Eliza. It was developed at MIT and simulated the therapeutic approach of the famous psychologist Carl Rogers.

Eliza was a primitive version of what we now know as chatbots. It was developed in 1964 (indeed, almost 60 years before ChatGPT was born). The source code and idea belong to Joseph Weizenbaum, one of the fathers of AI.

Weizenbaum decided to permanently cancel development of Eliza in 1967 after seeing people’s reactions when they talked with it.

What moved the MIT researcher to cancel his project was not the risk of using Eliza in a real therapeutic setting. Eliza asked pertinent questions and offered more or less reasonable advice. No, what truly frightened the creator of Eliza was how its first users attributed human qualities to the software: they started to care for it. Weizenbaum realized this one day when his own secretary asked him to leave the office because she was going to talk to Eliza about personal matters.

ChatGPT

Over the last ten months, the world has had an experience similar to that of Eliza’s beta testers. We have admired the capabilities of language models like ChatGPT. We have asked it to help us write a text. We have sought advice on a decision that we had to make in our day-to-day work life.

Yes, ChatGPT impresses us.

But it also scares us, because it is capable of asserting completely false statements with the confidence of an expert. ChatGPT is like a wild horse: it has unbridled energy but is incapable of carrying a rider safely.

This is being proven by institutions that wish to use chatbots to train their employees or to inform their customers rigorously. Language models born in 2023 take a long time to tame. And they never end up being completely ready to compete in a horse show without making mistakes.

AI that is ready

AI’s biggest benefits are hidden in the back office.

The uses of AI that can truly lead to a productivity boom among healthcare professionals and, consequently, improve patient care are hidden in the routine tasks of their everyday work.

It is the least attractive version of AI, but it is already capable of taking on the following tasks and completing them with a tolerable error rate. I am referring to tasks like…

- Filling in forms and adding the data to databases

- Sending appointments

- Transcribing and summarizing conversations

- Writing reports

- Recognizing the most challenging handwriting (dear doctors…!)

- Planning resources

- Analyzing results

- and a long list of others

All of it under the supervision of professionals, who will, for now, have the last word.

With the right incentives, both healthcare workers and companies that embrace AI in their most boring processes will be opening a window to a future that is less costly, more efficient and more capable than today’s.

AI that isn’t ready

And what can we venture about the Elizas of the future, those capable of understanding patients, giving diagnoses and prescribing health decisions?

In all aspects of AI development there are maturing phases that range from the initial human-supervised training to the level of autonomous performance at which systems are able to outperform humans.

In the field of self-driving vehicles, for instance, we have been told for over ten years that complete autonomy, something technically known as Level 5, will come in one or two years. Reality is more stubborn. This is why there are no self-driving cars on our streets and highways, and no one in the world is able to say for sure when we will see them.

In these types of developments (autonomous cars, new treatments, etc.) there is a final 2% or 3% of optimization that is extremely costly to reach. And it might never be achieved.

Let’s circle back to the field of medicine.

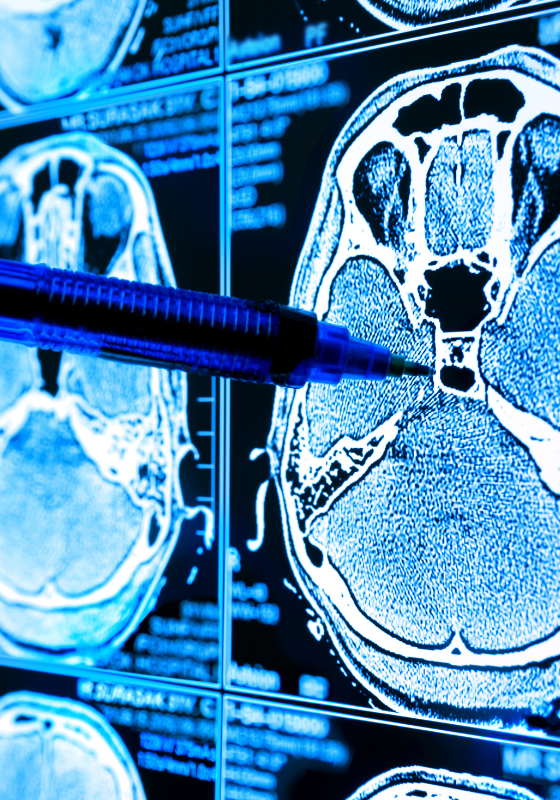

There are aspects of medical diagnosis in which AI can be more precise than humans. This is the case, for example, in the detection of certain types of cancer. Models developed by Google DeepMind, trained on large volumes of data, are capable of revealing tumors that professionals cannot see.

But even more promising projects in healthcare, like accelerating the development of new treatments, are at an unpredictable distance in time. According to investment bank Morgan Stanley, Big Pharma will invest 50 billion dollars over the next five years to achieve it. Will they succeed? It is possible, but we do not know.

Regarding the promise of a virtual doctor, we are probably 3 to 5 years away from the appearance of chatbots with professional judgment and a level of knowledge superior to that of human beings. Healthcare professionals and medical committees will be the first to use them as an aid in decision making. It is easy to think that later on, with the right supervision from authorities, AI-based second opinion services will emerge for patient use.

These are cases of AI that awe us in their demo versions.

But it is in the field of management where, in my opinion, it is more sensible, beneficial and urgent to make decisions to improve healthcare.

That is where AI will truly keep its promises.

Gustavo Entrala, expert in innovation strategies, company board adviser and Senior Advisor at LLYC